Cursor is actively testing the limits of autonomous coding agents, and early results are already influencing how the company views long-duration software development. After making OpenAI’s GPT-5.2 available on its platform, Cursor disclosed that the model demonstrated significantly higher reliability than Anthropic’s Claude Opus 4.5 when assigned complex, extended coding workflows. The findings suggest that newer AI models may be better suited for sustained, multi-step engineering tasks that traditionally require coordinated human teams.

The comparison comes from an ambitious internal experiment in which Cursor attempted to build a web browser from the ground up using autonomous agents. According to CEO Michael T. Truell, the rendering engine was written entirely in Rust and designed to handle core browser components, including HTML parsing, CSS layout, text shaping, and even a custom JavaScript virtual machine. “It kind of works,” Truell wrote, noting that while the browser is nowhere near the maturity of engines such as WebKit or Chromium, the team was struck by how quickly and accurately it could render simple websites.

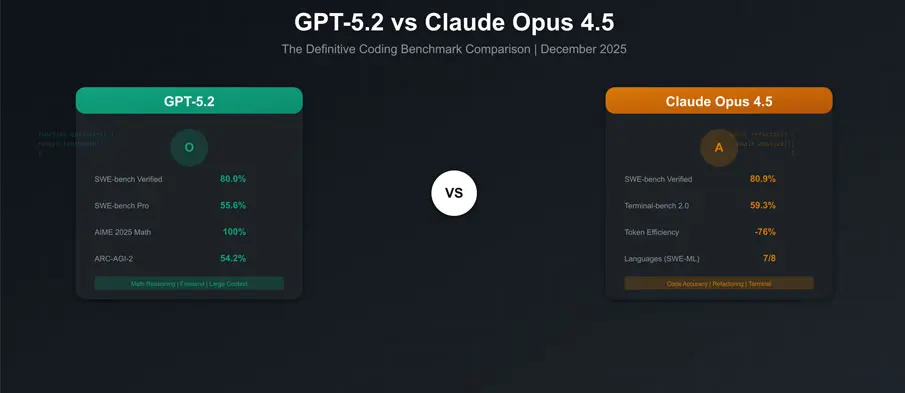

Cursor explained in a research blog post that the project was intentionally designed to test whether autonomous agents could sustain focus and execution over long periods, on work that would normally take an experienced engineering team several months. “We found that GPT-5.2 models are much better at extended autonomous work: following instructions, keeping focus, avoiding drift, and implementing things precisely and completely,” the company said. In contrast, Cursor observed that Opus 4.5 “tends to stop earlier and take shortcuts,” which makes it less reliable for prolonged, end-to-end development efforts.

The experiment has broader implications for how AI-assisted software development may evolve. Long-running tasks, such as building foundational infrastructure or complex systems, have historically been considered out of reach for autonomous agents because of problems like context loss, inconsistency, and incomplete execution. Cursor’s findings suggest that these limitations may be receding as models become more reliable at following instructions over extended timelines.

To encourage further exploration and transparency, Cursor has made the browser’s source code publicly available on GitHub. The release offers developers and researchers a concrete example of what autonomous coding agents can already achieve, and a glimpse into a future where AI systems take on increasingly large and complex software projects with minimal human intervention.