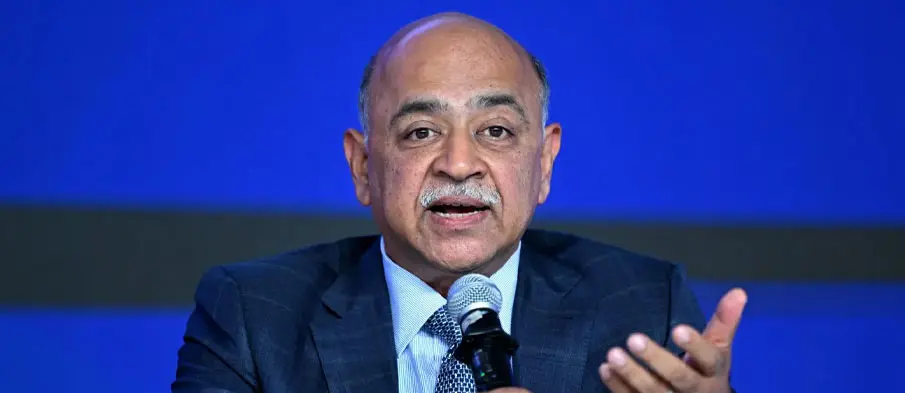

IBM CEO Arvind Krishna has raised serious questions about whether the enormous capital now flowing into artificial general intelligence (AGI) infrastructure can ever earn an adequate financial return. Speaking on The Verge’s Decoder podcast, Krishna argued that the industry’s push to build AI data centers at unprecedented scale puts companies on track for roughly $8 trillion in cumulative capital commitments. That level would require about $800 billion in annual profit just to cover the cost of capital.

Krishna’s caution comes as the world’s largest technology companies publicly announce multi-gigawatt AI campuses, each racing to outspend the others to build the compute backbone for future AGI systems. His estimate that fully equipping a one-gigawatt AI data center costs about $80 billion has become central to the debate. As for total scale, Krishna noted that “taken together, public and private announcements point to roughly one hundred gigawatts of currently planned capacity dedicated to AGI-class workloads.” At $80 billion per gigawatt, 100 gigawatts of capacity lands squarely at the $8 trillion mark.
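The arithmetic behind these headline figures is simple enough to check directly. The sketch below uses only the numbers quoted in this article: $80 billion per gigawatt, roughly 100 gigawatts, and about $800 billion a year. The function and constant names are illustrative. The 10% annual cost of capital it prints is an inference from dividing those figures, not a rate Krishna is quoted as stating.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# Inputs come from the article; the 10% rate is derived here, not quoted.

COST_PER_GW_BILLION = 80            # Krishna's estimate: ~$80B to equip 1 GW
PLANNED_CAPACITY_GW = 100           # roughly 100 GW of announced capacity
ANNUAL_PROFIT_NEEDED_BILLION = 800  # profit cited as needed to cover cost of capital


def total_capex_billion(capacity_gw: float, cost_per_gw_billion: float) -> float:
    """Cumulative capital outlay, in billions of dollars."""
    return capacity_gw * cost_per_gw_billion


def implied_cost_of_capital(annual_profit_billion: float, capex_billion: float) -> float:
    """Annual rate implied by dividing the required profit by the capital deployed."""
    return annual_profit_billion / capex_billion


capex = total_capex_billion(PLANNED_CAPACITY_GW, COST_PER_GW_BILLION)
rate = implied_cost_of_capital(ANNUAL_PROFIT_NEEDED_BILLION, capex)
print(f"Total capex: ${capex / 1000:.0f} trillion")  # Total capex: $8 trillion
print(f"Implied cost of capital: {rate:.0%}")         # Implied cost of capital: 10%
```

In other words, the $800 billion figure corresponds to about a 10% annual return on $8 trillion of deployed capital.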

A key element of Krishna’s argument is hardware depreciation. AI accelerators are typically written down over five years, and given the pace of architectural change, he believes enterprises have little choice but to refresh entire fleets on that same cycle. As he put it: “You’ve got to use it all in five years because at that point, you’ve got to throw it away and refill it.” Krishna stressed that depreciation is the factor most investors underappreciate. Because the hardware must be replaced wholesale every five years, capex becomes a recurring burden rather than a one-time outlay.
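To see why the five-year write-down matters, here is a rough sketch under three assumptions. It uses straight-line depreciation, assumes zero salvage value, and, as a simplifying upper bound, treats the entire $80 billion for a single one-gigawatt site as hardware that must be replaced on each refresh. These assumptions, the function names, and the six-year comparison are illustrative choices, not figures from Krishna.

```python
import math


def straight_line_depreciation(cost_billion: float, useful_life_years: int) -> float:
    """Annual depreciation charge under straight-line, zero-salvage assumptions."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return cost_billion / useful_life_years


def cumulative_refresh_spend(cost_billion: float, refresh_years: int, horizon_years: int) -> float:
    """Total hardware spend if the fleet is bought at year 0 and fully
    replaced every `refresh_years` until the horizon is covered."""
    purchases = math.ceil(horizon_years / refresh_years)
    return purchases * cost_billion


# Assumption: treat the full $80B for one 1 GW site as refreshable hardware.
SITE_COST_BILLION = 80

for life in (5, 6):
    annual = straight_line_depreciation(SITE_COST_BILLION, life)
    print(f"{life}-year life: ${annual:.1f}B depreciation per year")
# 5-year life: $16.0B depreciation per year
# 6-year life: $13.3B depreciation per year

# Ten years with five-year refreshes means buying the fleet twice.
print(cumulative_refresh_spend(SITE_COST_BILLION, refresh_years=5, horizon_years=10))  # 160
```

Under these assumptions, each extra year of assumed useful life lowers the reported annual charge. The cash spent on replacing the fleet, however, depends on how often the hardware actually has to be swapped out.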

His remarks echo broader skepticism in financial circles. Investors such as Michael Burry have likewise questioned whether hyperscalers can realistically extend the useful life of their GPUs when escalating model complexity forces faster hardware turnover. Krishna also doubted that large language model architectures in their current form are on track to reach AGI, putting the probability at between 0 and 1% unless new forms of knowledge integration emerge.

Despite these concerns, Krishna emphasized that today’s generative AI systems will still deliver meaningful productivity gains for enterprises. However, he warned that companies building multi-gigawatt AI campuses must show returns that justify investment on this scale. He believes that challenge remains unresolved given current cost structures and refresh cycles.